|

I am an ELLIS PhD student at Marcus and Anna Rohrbach's Multimodal AI Lab, co-advised by Hervé Jégou. Previously, I was a research engineer working on efficient deep learning at Huawei Zurich. I hold an MSc in Machine Learning from University College London (UCL), where I was advised by Timoleon Moraitis and Pontus Stenetorp. Earlier, I interned as a Software Development Engineer at Amazon Web Services and obtained a BSc in Theoretical Physics from UCL. |

|

|

I'm interested in multimodal representation learning: improving efficiency and reliability through adaptable networks with adjustable compute budgets, and using more contextualised representations for sequential decision-making tasks. |

|

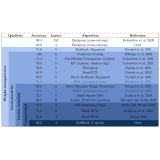

Adrien Journé, Hector Garcia Rodriguez, Qinghai Guo, Timoleon Moraitis. ICLR notable-top-25% (spotlight), 2023. arXiv / code / talk. We train deep ConvNets with multilayer SoftHebb, an unsupervised Hebbian soft winner-take-all algorithm. It sets state-of-the-art results among biologically plausible networks for image classification on CIFAR-10, STL-10 and ImageNet. SoftHebb improves the biological compatibility, parallelisation and performance of state-of-the-art bio-plausible learning. |

|

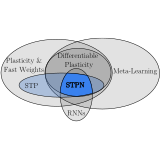

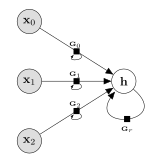

Hector Garcia Rodriguez, Qinghai Guo, Timoleon Moraitis. ICML, 2022. arXiv / code / talk / poster / slides. STPN is a recurrent neural network, inspired by computational neuroscience, that improves supervised and reinforcement learning by meta-learning to adapt its weights to the recent context. STPN also shows higher energy efficiency in a simulated neuromorphic implementation, owing to its optimised explicit forgetting mechanism. |

|

|